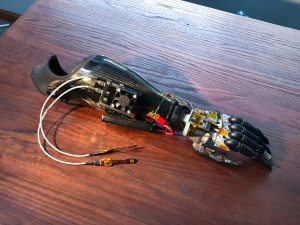

Photograph courtesy of the Neuroelectronics Lab, University of Minnesota.

A team at the University of Minnesota Twin Cities, along with industry collaborators, designed a small, implantable device that attaches to the peripheral nerve of a user’s residual limb to improve brain-controlled prosthetic systems. When combined with an artificial intelligence (AI) computer and a robotic arm, the device can read and interpret brain signals, allowing users to control the prosthetic arm using only their thoughts. The researchers say the device is more accurate and less invasive than other prosthetic control systems, and more intuitive because it doesn’t rely on subtle muscle movements in the user’s residual limb, which can take months to learn.

“It’s a lot more intuitive than any commercial system out there,” said Jules Anh Tuan Nguyen, PhD. “With other commercial prosthetic systems, when amputees want to move a finger, they don’t actually think about moving a finger. They’re trying to activate the muscles in their arm, since that’s what the system reads. Because of that, these systems require a lot of learning and practice. For our technology, because we interpret the nerve signal directly, it knows the patient’s intention. If they want to move a finger, all they have to do is think about moving that finger.”

Nguyen, one of the key developers of the neural chip technology, has been working on the project for ten years with Zhi Yang, PhD, a biomedical engineering associate professor at the university.

The project began in 2012 when Edward Keefer, PhD, a neuroscientist and CEO of Nerves Incorporated, Dallas, approached Yang about creating a nerve implant that could benefit amputees. They have since received funding from the Defense Advanced Research Projects Agency and conducted several successful clinical trials.

The developers also formed a startup called Fasikl—a play on the word “fascicle,” which refers to a bundle of nerve fibers—to commercialize the technology.

The research team attributes the system’s strong performance relative to similar technologies to its use of AI, which applies machine learning to help interpret the signals from the nerve.

“Artificial intelligence has the tremendous capability to help explain a lot of relationships,” Yang said. “This technology allows us to record human data, nerve data, accurately. With that kind of nerve data, the AI system can fill in the gaps and determine what’s going on. That’s a really big thing, to be able to combine this new chip technology with AI. It can help answer a lot of questions we couldn’t answer before.”

To watch a video of the device in practice, visit the university’s YouTube channel.

The study, “A portable, self-contained neuroprosthetic hand with deep learning-based finger control,” was published in the Journal of Neural Engineering.

Editor’s note: This story was adapted from materials provided by the University of Minnesota.