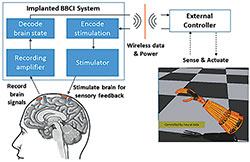

The BBCIs being developed at CSNE can both record from and stimulate the nervous system. They would also allow the brain to directly control prostheses or other external devices to enhance movement or reanimate paralyzed limbs. Graphic courtesy of the Center for Sensorimotor Neural Engineering.

The brain needs information from a fingertip, limb, or external device to understand how firmly a person is gripping or how much pressure is needed to perform everyday tasks. Now, University of Washington (UW) researchers at the National Science Foundation Center for Sensorimotor Neural Engineering (CSNE) have used direct stimulation of the human brain surface to provide basic sensory feedback through artificial electrical signals, enabling a patient to control movement while opening and closing his hand.

It’s a first step toward developing closed-loop, bidirectional brain-computer interfaces (BBCIs) that enable two-way communication between parts of the nervous system, according to the researchers. BBCIs would also allow the brain to directly control external prostheses or other devices that can enhance movement, or even reanimate a paralyzed limb, while getting sensory feedback. The results of this research will be published in the October-December issue of IEEE Transactions on Haptics. An early-access version of the paper is available online.

The team of bioengineers, computer scientists, and medical researchers from the CSNE and UW’s GRIDLab stimulated the patient’s brain with electrical signals of different current intensities, dictated by the position of the patient’s hand as measured by a glove he wore. The stimulation was delivered through implanted electrocorticographic (ECoG) electrodes, which provide stronger and more accurate signals than sensors placed on the scalp but are less invasive than electrodes that penetrate the brain. The patient then used those artificial signals delivered to the brain to “sense” how the researchers wanted him to move his hand. However, whether this type of sensation can be as diverse as the textures and feelings that people can sense tactilely is an open question, said co-author and UW bioengineering doctoral student James Wu.

In the UW study, three patients wore a glove embedded with sensors that provided data about where their fingers and joints were positioned. They were asked to stay within a target position somewhere between having their hands open and closed without being able to see what that target position was. The only feedback they received about the target hand position was artificial electrical data delivered by the research team. When their hands opened too far, they received no electrical stimulus to the brain. When their hands were too closed (similar to squeezing something too hard), the electrical stimulus was delivered at a higher intensity.
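The feedback rule described above can be sketched in a few lines of Python. This is only an illustration, not the researchers' implementation: the names `Stimulus` and `feedback_for_aperture`, the 0-to-1 aperture scale, and the target bounds are all hypothetical. The article states only that an overly open hand received no stimulus and an overly closed hand received a higher-intensity one; the lower-intensity stimulus inside the target range is an assumption.

```python
from enum import Enum


class Stimulus(Enum):
    """Illustrative stimulation levels (hypothetical names)."""
    NONE = 0   # hand too open: no stimulation, as the article describes
    LOW = 1    # hand inside the target range: ASSUMED lower intensity
    HIGH = 2   # hand too closed: higher intensity, as the article describes


def feedback_for_aperture(aperture: float,
                          target_low: float,
                          target_high: float) -> Stimulus:
    """Map a normalized hand aperture to a stimulation level.

    aperture: 0.0 = fully closed, 1.0 = fully open (assumed normalization
    of the glove's sensor readings).
    target_low, target_high: bounds of the hidden target range (assumed).
    """
    if not 0.0 <= aperture <= 1.0:
        raise ValueError("aperture must be between 0.0 and 1.0")
    if target_low > target_high:
        raise ValueError("target_low must not exceed target_high")

    if aperture > target_high:
        return Stimulus.NONE   # opened too far
    if aperture < target_low:
        return Stimulus.HIGH   # closed too far, like squeezing too hard
    return Stimulus.LOW        # within the target range


if __name__ == "__main__":
    # Sweep the hand from open to closed against an example target range.
    for aperture in (0.9, 0.5, 0.1):
        print(aperture, feedback_for_aperture(aperture, 0.4, 0.6).name)
```

In this sketch the patient's only cue is the change in stimulation level, so finding the hidden target means adjusting the hand until the signal shifts from absent or strong to the intermediate level.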

One patient reached the target position with accuracy well above chance when receiving the electrical feedback. Performance dropped when the patient received random signals regardless of hand position, suggesting that the patient had been using the artificial sensory feedback to control hand movement.

Providing that artificial sensory feedback in a way that the brain can understand is key to developing prostheses, implants, or other neural devices that could restore a sense of position, touch, or feeling in patients for whom that connection has been severed.

“Right now we’re using very primitive kinds of codes where we’re changing only frequency or intensity of the stimulation, but eventually it might be more like a symphony,” said co-author Rajesh Rao, CSNE director and UW professor of computer science & engineering. “That’s what you’d need to do to have a very natural grip for tasks such as preparing a dish in the kitchen. When you want to pick up the salt shaker and all your ingredients, you need to exert just the right amount of pressure. Any day-to-day task like opening a cupboard or lifting a plate or breaking an egg requires this complex sensory feedback.”

Editor’s note: This story was adapted from materials provided by the University of Washington.